In the realm of data analysis and manipulation, SQL (Structured Query Language) stands as a cornerstone for interacting with relational databases. However, crafting SQL queries can sometimes be daunting, especially for those less familiar with the language. Enter SQLCoder2-7B, a cutting-edge model that leverages the power of natural language processing (NLP) to generate SQL queries from plain text. In this guide, we’ll explore how to utilize SQLCoder2-7B with the Yelp dataset, diving into the implementation details using llama_cpp and a compatible gguf model from Hugging Face.

Understanding SQLCoder2-7b

SQLCoder is a state-of-the-art NLP model designed specifically for generating SQL queries from natural language prompts. It is built upon advanced deep learning architectures and trained on vast datasets, enabling it to comprehend complex queries and produce accurate SQL statements. Click here to know more.

Preparing the Yelp Dataset

The Yelp dataset serves as an excellent playground for exploring SQLCoder2 7B model capabilities. This dataset contains a wealth of information, including business details, user reviews, and geographical data. I have used PostgreSQL database to store the yelp data. For this example, I am using only the reviews and business tables.

CREATE TABLE IF NOT EXISTS public.business

(

business_id character varying(50) COLLATE pg_catalog."default" NOT NULL,

name character varying(255) COLLATE pg_catalog."default",

address character varying(255) COLLATE pg_catalog."default",

city character varying(100) COLLATE pg_catalog."default",

state character(10) COLLATE pg_catalog."default",

postal_code character varying(20) COLLATE pg_catalog."default",

latitude numeric,

longitude numeric,

stars double precision,

review_count integer,

is_open integer,

attributes jsonb,

categories character varying(1000) COLLATE pg_catalog."default",

hours jsonb,

CONSTRAINT business_pkey PRIMARY KEY (business_id)

)

CREATE TABLE IF NOT EXISTS public.review

(

review_id character varying(50) COLLATE pg_catalog."default" NOT NULL,

user_id character varying(50) COLLATE pg_catalog."default",

business_id character varying(50) COLLATE pg_catalog."default",

stars integer,

useful integer,

funny integer,

cool integer,

review_text text COLLATE pg_catalog."default",

date timestamp without time zone,

CONSTRAINT review_pkey PRIMARY KEY (review_id)

)

Setting Up llama_cpp_python and gguf Model

To run SQLCoder2-7b effectively, we’ll utilize llama_cpp_python, a versatile tool for executing NLP models. Additionally, we’ll acquire a compatible gguf model from Hugging Face’s model repository, ensuring seamless integration with llama_cpp. You can download hugging face models into your local machine using the following Python code:

from huggingface_hub import snapshot_download

model_path = snapshot_download(repo_id="defog/sqlcoder-7b-2",repo_type="model", local_dir="../models/sqlcoder-7b-2", local_dir_use_symlinks=False)

Once the model is downloaded, you can find the GGUF file which is compatible with llama_cpp_python. To download and install llama-cpp-python, please follow this link.

Generating SQL Queries with SQLCoder2-7B

With the setup complete, it’s time to put SQLCoder 7b 2 to the test. It’s a three step process.

Step-1: You need to provide metadata to the model explaining your table structure. Below is the sample metadata I used for Yelp dataset. Let’s call it metadata.sql.

CREATE TABLE business (

business_id VARCHAR(50) PRIMARY KEY, -- Unique ID for each business

name VARCHAR(255), -- Name of the business

address VARCHAR(255), -- address of the business

city VARCHAR(100), -- city where the business is located

state VARCHAR(10), -- state with two letter US code where the business is located

postal_code VARCHAR(20), -- US zip code where the business is located

latitude DECIMAL(10,7), -- latitude coordinates of the business location

longitude DECIMAL(10,7), -- longitude coordinates of the business location

stars DECIMAL(2,1), -- number of star rating for the business

review_count INTEGER, -- total number of reviews for the business

is_open INTEGER, -- is the business open

attributes JSONB, -- Json formatted attributes of the business

categories VARCHAR(1000), -- categories that business belongs to

hours JSONB -- business open hours in a week grouped by day

);

CREATE TABLE (

review_id VARCHAR(50) PRIMARY KEY, -- Unique ID for each review

user_id VARCHAR(50), -- user ID of the user who wrote this review

business_id VARCHAR(50) -- business ID of the business on which this review was written

stars INTEGER, -- total stars given for this review

useful INTEGER, -- is this review useful, 1 for useful 0 for not useful

funny INTEGER, -- is this review funny, 1 for funny 0 for not funny

cool integer, -- is this review cool, 1 for cool 0 for not cool

review_text TEXT, -- review text which contains the original review given by user

date DATE -- Date on which this review was given

);

-- business.business_id can be joined with review.business_id

Step-2: You need a prompt template where you provide instructions, metadata, question/answer format. Let’s call it prompt.md.

### Task

Generate a SQL query to answer [QUESTION]{user_question}[/QUESTION]

### Instructions

- If you cannot answer the question with the available database schema, return 'I do not know'

### Database Schema

The query will run on a database with the following schema:

{table_metadata_string}

### Answer

Given the database schema, here is the SQL query that answers [QUESTION]{user_question}[/QUESTION]

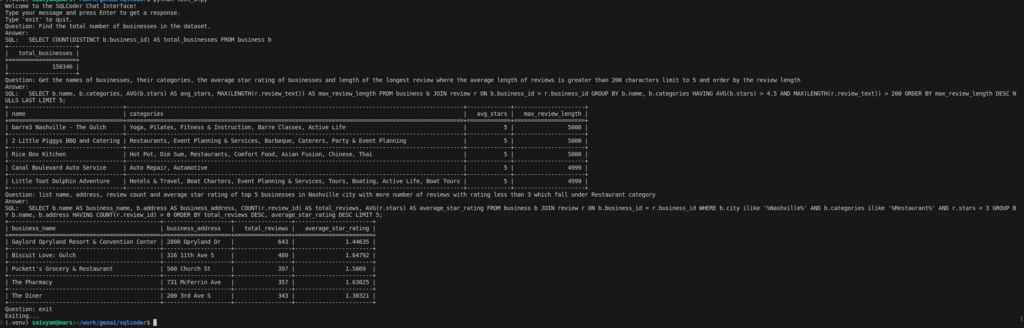

[SQL]Step-3: I created a chat like interface in command line. Now run the model and talk to your database. Below is the screenshot of the conversations with the SQLCoder2 7B model:

The generated SQL queries executed successfully on PostgreSQL database. It handles column names, join and where clauses effectively.

Let’s Jump into the code

Let’s look into the code blocks. Before that we need to install the required packages.

$ pip install llama-cpp-python psycopg2-binary tabulateLoad the model using Llama Python package.

from llama_cpp import Llama

import psycopg2

from tabulate import tabulate

LLM = Llama(model_path="../models/sqlcoder-7b-2/sqlcoder-7b-q5_k_m.gguf", n_gpu_layers=10, n_ctx=2048, verbose=False)Connect to PostgreSQL database. I have used the default configurations:

conn = psycopg2.connect(

dbname="yelp",

user="postgres",

password="postgres",

host="localhost",

port="5432"

)

cur = conn.cursor()Now we need a method to generate the prompt. For this method we use the metadata.sql and prompt.md files defined in the previous section.

def generate_prompt(question, prompt_file="prompt.md", metadata_file="metadata.sql"):

with open(prompt_file, "r") as f:

prompt = f.read()

with open(metadata_file, "r") as f:

table_metadata_string = f.read()

prompt = prompt.format(

user_question=question, table_metadata_string=table_metadata_string

)

return promptNow, we will send the prompt to the model and get the generated SQL and execute it on the PostgreSQL connection.

def query_model(question):

prompt = generate_prompt(question)

output = LLM(

prompt,

max_tokens=200, # Generate up to 32 tokens

stop=["Q:", "\n"],

echo=True # Echo the prompt back in the output

)

sql_query = output['choices'][0]['text'].split('[SQL]')[1]

print("SQL: ", sql_query)

return execute_query(sql_query)

def execute_query(query):

cur.execute(query)

rows = cur.fetchall()

headers = [desc[0] for desc in cur.description]

return tabulate(rows, headers=headers, tablefmt="grid")The final main function

def main():

print("Welcome to the SQLCoder Chat Interface!")

print("Type your message and press Enter to get a response.")

print("Type 'exit' to quit.")

while True:

user_input = input("Question: ")

if user_input.lower() == 'exit':

print("Exiting...")

cur.close()

conn.close()

break

print("Answer: ")

response = query_model(user_input)

print(response)

if __name__ == "__main__":

main()Conclusion

SQLCoder2 7b represents a significant advancement in the realm of NLP-driven SQL generation, offering a powerful tool for simplifying database interactions. By leveraging the capabilities of llama_cpp and a compatible gguf model from Hugging Face, users can seamlessly integrate SQLCoder2 7b into their data analysis workflows. Whether you’re a seasoned SQL expert or a newcomer to database querying, SQLCoder2 7b provides an intuitive and efficient solution for transforming text into SQL queries.