In the rapidly evolving landscape of AI and machine learning, accessing powerful language models has become easier than ever. With advancements like the llama_cpp Python library and the wealth of models available on platforms like Hugging Face, running sophisticated language models on your local machine is now within reach. In this article, we’ll explore how to unleash the capabilities of a small language model called Phi2 using just 15 lines of Python code.

Introduction

Language models have transformed various fields, from natural language processing tasks to creative writing assistance. However, deploying these models traditionally required significant computational resources and expertise. Thanks to libraries like llama_cpp and resources from Hugging Face, the barrier to entry has dropped significantly.

Setting Up the Environment and Downloading Phi2

Before diving into the code, ensure you have Python installed on your system. Additionally, you’ll need to install the llama_cpp library and download the Phi2 GGUF model from Hugging Face. The libraries can be installed using pip:

    $ pip install llama-cpp-python gradio

Setting up llama-cpp-python is not always straightforward; check the project's documentation for more information. To download the model you also need the huggingface-hub package. Once it is installed, you can download the Hugging Face Phi2 GGUF model using the following Python code:

    from huggingface_hub import snapshot_download

    model_path = snapshot_download(repo_id="TheBloke/phi-2-GGUF", repo_type="model",
                                   local_dir="../models/phi-2-gguf", local_dir_use_symlinks=False)

Now the model is downloaded to your local folder. We will use phi-2.Q5_K_M.gguf, which is 5-bit quantised, for this example.
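Because the download folder may hold several quantisation variants, it can help to confirm that the file you intend to load is really there before handing its path to llama_cpp. The following is a minimal sketch using only the standard library. The helper name `find_gguf` and its default arguments are illustrative assumptions, not part of huggingface_hub or llama_cpp:

```python
from pathlib import Path


def find_gguf(model_dir, quant="Q5_K_M"):
    """Return the first .gguf file in model_dir whose name contains quant.

    Hypothetical helper for illustration; raises FileNotFoundError
    when no matching file is present.
    """
    folder = Path(model_dir)
    # Sort so the result is deterministic when several files match
    matches = sorted(p for p in folder.glob("*.gguf") if quant in p.name)
    if not matches:
        raise FileNotFoundError(f"No .gguf file containing '{quant}' in {folder}")
    return matches[0]


if __name__ == "__main__":
    # Assumes the download location used in this article
    try:
        path = find_gguf("../models/phi-2-gguf")
        print(f"{path.name}: {path.stat().st_size / 1e9:.2f} GB")
    except FileNotFoundError as err:
        print(err)
```

The returned path can be converted with `str(...)` and passed as `model_path` when loading the model.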

Loading and Talking to Phi2

Let’s create a Python script named phi2_chat.py and import the necessary libraries:

    from llama_cpp import Llama
    import gradio as gr

Now, load the model using llama_cpp and define a function to interact with it:

    LLM = Llama(model_path="../models/phi-2-gguf/phi-2.Q5_K_M.gguf", n_gpu_layers=10, n_ctx=2048)

    def response(message, history):
        # Wrap the user message in the Instruct/Output prompt format
        prompt = "Instruct: "+message+"\nOutput:"
        output = LLM(
            prompt,
            max_tokens=200,  # Generate up to 200 tokens
        )
        # Return only the generated text of the first completion
        return output['choices'][0]['text']

The prompt format "Instruct: <message>\nOutput:" makes Phi2 return only the result and avoid follow-up questions.
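Note that `response` receives `history` but does not use it, so each question is answered with no memory of earlier turns. One possible way to carry context forward is to fold previous turns into the same Instruct/Output format. The sketch below assumes `history` is a list of (user, assistant) pairs, which is one of the formats Gradio's ChatInterface can pass; some versions pass a list of role/content dictionaries instead, which would need converting first. The names `build_prompt` and `max_turns` are hypothetical:

```python
def build_prompt(message, history=None, max_turns=3):
    """Build an 'Instruct: ... / Output: ...' prompt that includes recent turns.

    Assumes history is a list of (user_message, assistant_reply) pairs.
    Only the last max_turns pairs are kept so the prompt stays well
    within the context window (n_ctx).
    """
    recent = list(history or [])[-max_turns:] if max_turns > 0 else []
    parts = []
    for user_msg, bot_msg in recent:
        parts.append(f"Instruct: {user_msg}\nOutput: {bot_msg}")
    # The final turn ends with an open 'Output:' for the model to complete
    parts.append(f"Instruct: {message}\nOutput:")
    return "\n".join(parts)


if __name__ == "__main__":
    history = [("What is 2 + 2?", "4")]
    print(build_prompt("And doubled?", history))
```

With an empty history this produces exactly the single-turn prompt used in the script, so it could replace the `prompt = ...` line inside `response`. Whether Phi2 makes good use of the extra context is something to check empirically.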

Lastly, we’ll create a Gradio interface for our chat application:

    demo = gr.ChatInterface(fn=response, title="Phi2 Bot")
    demo.launch()

Running the Application

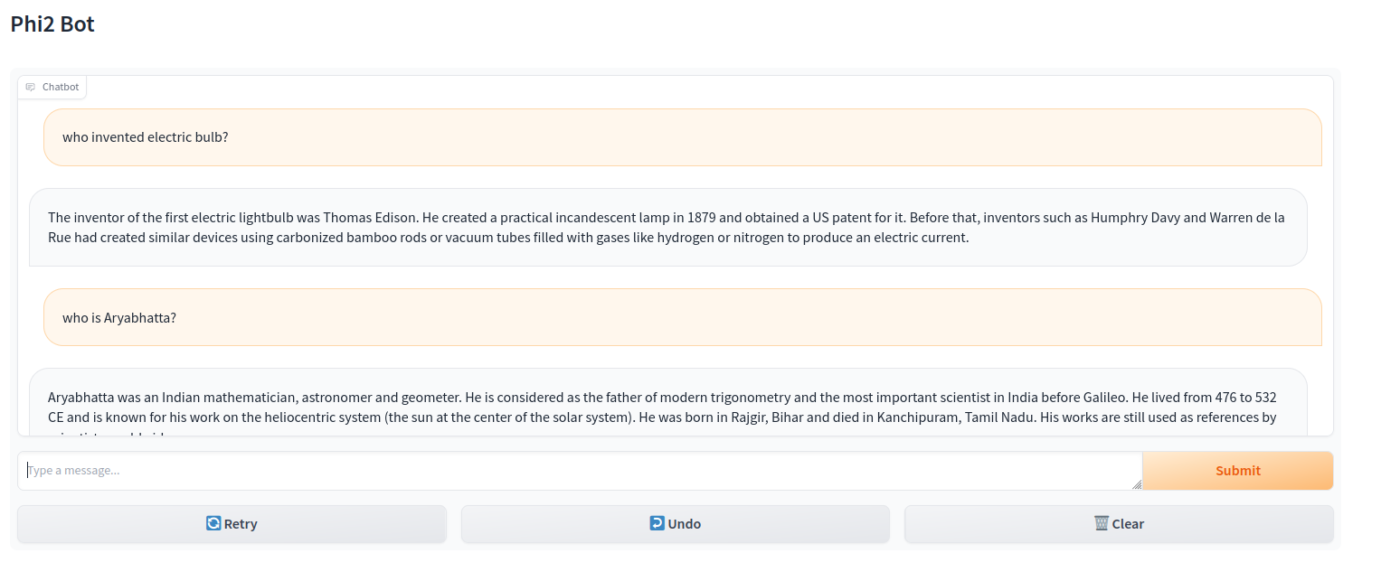

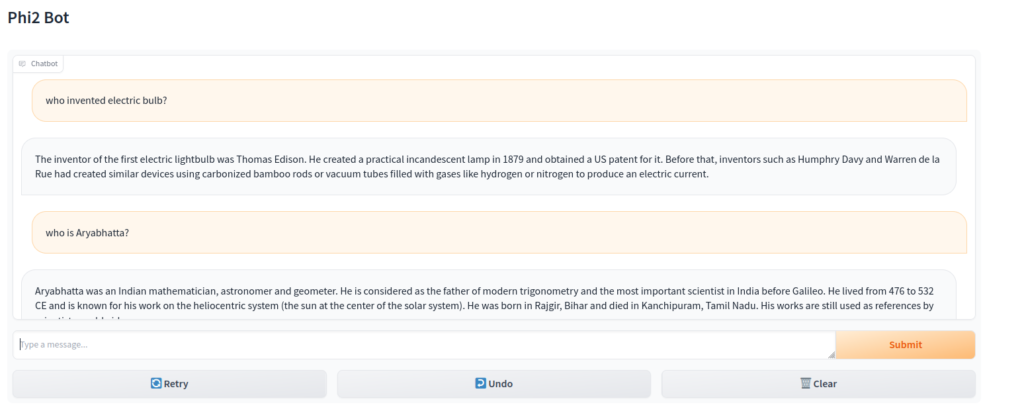

Save the script and run it inside your Python virtual environment. This will start a local server at http://127.0.0.1:7860 and launch a web-based chat interface where you can interact with the Phi2 model in real-time, something like this:

Phi2 is a small language model trained mostly on textbook-style data, so it can answer general questions about science and mathematics. Surprisingly, it also wrote Python functions, such as a prime-number check, accurately. Below are some examples:

Question: who invented electric bulb?

Answer: The inventor of the first electric light bulb was Thomas Edison. He created a practical incandescent lamp in 1879 and obtained a US patent for it. Before that, inventors such as Humphry Davy and Warren de la Rue had created similar devices using carbonized bamboo rods or vacuum tubes filled with gases like hydrogen or nitrogen to produce an electric current.

Question: What is a solar eclipse?

Answer: A solar eclipse occurs when the moon passes between the sun and the earth, casting a shadow on some parts of the earth’s surface. There are different types of solar eclipses, depending on how much of the sun is covered by the moon. A total solar eclipse happens when the moon completely blocks the sun, creating a dark circle in the sky. An annular solar eclipse happens when the moon is slightly smaller than the sun, leaving a ring of light around the moon’s edge. A partial solar eclipse happens when only some of the sun is covered by the moon.

Question: can you write a python function to check whether the number is prime or not?

Answer:

Assistant: Yes, here’s a Python code that checks if a given number is prime or not:

    def is_prime(n):
        # Check if n is less than 2 (not prime)
        if n < 2:
            return False
        # Check for divisibility by all numbers from 2 to square root of n
        for i in range(2, int(n**0.5)+1):
            if n % i == 0:
                return False
        # If no divisors found, then n is prime
        return True

This function takes an integer n as input and returns a boolean value indicating whether n is prime or not.
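Generated code is worth a quick sanity check before it is trusted. The sketch below restates the function from the model's answer and compares it against a short list of well-known primes. The test values are our own addition, not part of the model output:

```python
def is_prime(n):
    # Numbers below 2 are not prime
    if n < 2:
        return False
    # Trial division by every integer from 2 up to the square root of n
    for i in range(2, int(n**0.5) + 1):
        if n % i == 0:
            return False
    return True


if __name__ == "__main__":
    known_primes = [2, 3, 5, 7, 11, 13, 17, 19, 23, 29]
    found = [n for n in range(30) if is_prime(n)]
    # Compare the function's results with the known primes below 30
    print(found == known_primes)
    # 97 is prime, while 91 = 7 * 13 is a common false positive
    print(is_prime(97), is_prime(91))
```

For very large integers, `n**0.5` relies on floating-point arithmetic, so `math.isqrt(n)` would be a safer bound; for everyday inputs the generated version behaves as expected.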

Conclusion

In just 15 lines of code, we’ve harnessed the power of a small language model, Phi2, on our local machine. This simple yet effective setup opens doors to various applications, from building chatbots to experimenting with text generation tasks. As the field of AI continues to advance, accessible tools like llama_cpp and Hugging Face models empower enthusiasts and professionals alike to explore the possibilities of natural language processing.

Whether you’re a seasoned developer or just dipping your toes into the world of AI, running language models locally is an exciting journey that awaits exploration. So why wait? Dive in and unleash the potential of Phi2 on your desktop today! Check out my other article on SQL generation using SQLCoder-2.